The finale: cost optimization in the cloud

by Oshrat Nir on Jul 1, 2021

Thanks for getting this far with us. By now you will have learned that moving workloads to Kubernetes and the public cloud will not necessarily get you to the cost-saving goals you were hoping for. You have also seen that each cost driver can be tweaked to get you to optimal spend.

👋 ICYMI: Catch the previous articles in this series: Intro, Part 1, Part 2.

In this installment of the series, we talk about trade-offs, because sometimes you have to give a little in order to gain something. We also recommend some additional tooling related to cost optimization and take a look at cost allocation, since it may be relevant to your organization.

Trade-offs

Kubernetes offers instance flexibility. Cloud providers, on the other hand, incentivize you to commit to capacity up front through reserved instances and savings plans. These commitments limit that flexibility, and if you over-provision them, they may not even end up costing less. Getting things right-sized is a fine line to walk.
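The over-provisioning risk is easy to see with a little arithmetic. The sketch below compares a commitment with on-demand pricing for a single instance. The hourly rate, the 40% discount, and the utilization levels are hypothetical placeholders, not any provider's real prices; the one assumption that matters is that a commitment is billed for every hour of the term, whether or not you use it.

```python
# Hypothetical prices for illustration only; not real cloud provider rates.
HOURS_PER_MONTH = 730      # approximate number of hours in a month
ON_DEMAND_RATE = 0.10      # hypothetical price per instance-hour
COMMIT_DISCOUNT = 0.40     # hypothetical discount for a reservation or savings plan


def on_demand_cost(used_hours: float, rate: float = ON_DEMAND_RATE) -> float:
    """On-demand: you pay only for the hours the instance actually runs."""
    return rate * used_hours


def committed_cost(hours: float = HOURS_PER_MONTH,
                   rate: float = ON_DEMAND_RATE,
                   discount: float = COMMIT_DISCOUNT) -> float:
    """Committed: you pay the discounted rate for every hour, used or not."""
    return rate * (1 - discount) * hours


def break_even_utilization(discount: float = COMMIT_DISCOUNT) -> float:
    """Fraction of hours you must actually use before the commitment pays off."""
    return 1 - discount


if __name__ == "__main__":
    for utilization in (0.5, 0.8):
        used = HOURS_PER_MONTH * utilization
        print(f"utilization {utilization:.0%}: "
              f"on-demand {on_demand_cost(used):.2f}, committed {committed_cost():.2f}")
    print(f"break-even utilization: {break_even_utilization():.0%}")
```

With these made-up numbers, the commitment only pays off once the instance is busy more than 60% of the time (1 minus the discount). Capacity you commit to but do not use pushes you back below that line.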

As mentioned earlier, autoscaling is a powerful tool for accommodating different and changing traffic patterns while keeping compute resources to a minimum. However, it comes at the cost of some control. When you combine different types of automated scaling, it is hard to predict what will happen if your application gets a sudden surge in traffic. Getting it right therefore takes trial and error to set up, and continual tweaking to keep things running as efficiently and reliably as possible.
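Part of what makes autoscaling hard to predict is that each layer follows its own simple rule. The Kubernetes documentation describes the Horizontal Pod Autoscaler's core calculation as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, skipped when the ratio is within a tolerance (0.1 by default). The function below, `hpa_desired_replicas`, is a hypothetical, simplified re-implementation of that rule for illustration only; it ignores stabilization windows, pod readiness, and missing metrics, all of which the real controller handles.

```python
import math


def hpa_desired_replicas(current_replicas: int,
                         current_metric: float,
                         target_metric: float,
                         min_replicas: int = 1,
                         max_replicas: int = 10,
                         tolerance: float = 0.1) -> int:
    """Simplified HPA rule: ceil(current * currentMetric / targetMetric), clamped.

    Hypothetical sketch: ignores stabilization windows, pod readiness,
    and missing metrics, which the real controller takes into account.
    """
    ratio = current_metric / target_metric
    # If the ratio is close enough to 1.0, scaling is skipped.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # The result is always bounded by minReplicas and maxReplicas.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 pods averaging 90% CPU against a 60% target: scale out.
    print(hpa_desired_replicas(4, 90, 60))   # → 6
    # A sudden surge (300% vs 60%) wants 20 pods but is capped at max_replicas.
    print(hpa_desired_replicas(4, 300, 60))  # → 10
    # 62% vs 60% is within the tolerance, so nothing changes.
    print(hpa_desired_replicas(4, 62, 60))   # → 4
```

In the surge case, the HPA asks for many more pods at once, but they only start if there are nodes to schedule them on. Whether and how quickly those nodes appear depends on a separate autoscaler with its own timing, and that interaction is what takes trial and error to tune.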

Isolation and reliability are based on namespacing and container isolation, which give you clear boundaries you can rely on. But there are still some shared components, and you have to decide how to segment them. Running an Ingress Controller per namespace lowers overall utilization, but it makes each team responsible for its own controller's performance. Finding the balance is key.

In theory, the scalability and power of Kubernetes allow you to create as many clusters as you like. However, cluster sprawl and cluster size both increase the infrastructure you pay for. So even if you can charge the costs back to the teams, they still need to be accounted for and can possibly be minimized.

Kubernetes, and managed Kubernetes in particular, has lowered the barrier to entry for those interested in trying it out. Once a pet project is ready to go out into the world as a production workload, however, a steep learning curve appears. You need to learn and understand the available features and the scenarios they apply to, as well as real-world use cases for production-grade applications.

The huge ecosystem that supports Kubernetes, and cloud native in general, offers numerous tools to help you with many things. That said, every tool you introduce adds complexity to your setup: you need to maintain it and keep it up to date. So even with tooling, there is a fine line between the problem a tool solves and the cost it creates. Be deliberate about what you put in your clusters.

"Through 2024, nearly all legacy applications migrated to public cloud infrastructure as a service (IaaS) will require optimization to become more cost-effective." (Gartner, 4 Trends Impacting Cloud Adoption in 2020)

Recommendations for cloud-native tooling

As mentioned earlier, the Kubernetes ecosystem is large and provides many adjacent tools to solve many problems. Here we focus on some cloud-native tools that can help you with cost optimization.

Observability stacks

There are many observability and monitoring stacks available, and most of them are converging on the same capabilities: they let you track resource consumption across compute, storage, and network. Note that we are discussing a stack, so expect it to consist of more than one tool.

Cost management tools

There are some good solutions out there. They correlate Kubernetes resources, such as deployments or persistent volumes, with cloud provider expenses, which may make them a better option than building a homegrown solution to the same end. These tools typically take advantage of labels and give you insight into which teams or projects are wasting resources. Examples include Kubecost, Cloudability, and Replex.

kboom

kboom is a cloud-native load-testing tool. It gives you insight into how your applications behave under increased traffic and supports other test scenarios as well. You can use this information to set resource requests and limits.

Goldilocks

Goldilocks builds on the Vertical Pod Autoscaler (VPA) and adds an interface on top. Its dashboard helps you pinpoint suitable resource requests for your application, which you can then apply to the application manifest.
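To get a feel for what such a recommendation involves, here is a deliberately simplified stand-in: take a high percentile of observed CPU usage and add a safety margin. The real VPA recommender works from decaying usage histograms and is more involved. The 90th percentile, the 15% margin, and the sample values are assumptions chosen for illustration, and `recommend_cpu_request` is a hypothetical helper, not part of Goldilocks or VPA.

```python
from typing import Sequence


def percentile(samples: Sequence[int], pct: int) -> int:
    """Nearest-rank percentile (pct in 1..100) of a non-empty list of samples."""
    ordered = sorted(samples)
    # ceil(pct / 100 * n) using integer arithmetic to avoid float rounding.
    rank = (pct * len(ordered) + 99) // 100
    return ordered[max(rank, 1) - 1]


def recommend_cpu_request(usage_millicores: Sequence[int],
                          pct: int = 90,
                          margin_percent: int = 15) -> int:
    """Hypothetical recommender: a high percentile of usage plus headroom."""
    base = percentile(usage_millicores, pct)
    # Add the safety margin, rounding up to whole millicores.
    return (base * (100 + margin_percent) + 99) // 100


if __name__ == "__main__":
    # Hypothetical CPU usage samples for one container, in millicores.
    samples = [100, 120, 130, 150, 160, 170, 180, 200, 220, 400]
    print(recommend_cpu_request(samples))  # → 253 (p90 = 220, plus 15%)
```

The single 400m spike does not drive the recommendation. Whether that is acceptable depends on how your application copes with occasional CPU throttling, which is another trade-off.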

KEDA

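KEDA (Kubernetes Event-driven Autoscaling) scales applications based on the number of events waiting to be processed, such as messages in a queue, and can scale them down to zero when there are none. As a result, you do not have idle workers waiting for events.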
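The core idea of event-driven scaling fits in a few lines: derive the replica count from the backlog instead of from CPU, and allow the minimum to be zero. The function below is a hypothetical sketch of that idea, not KEDA's code. In KEDA itself, the bounds are configured with `minReplicaCount` and `maxReplicaCount` on a ScaledObject, and scaling back to zero waits for a `cooldownPeriod`; the sketch leaves that timing out, and `events_per_replica` is an assumed per-replica target.

```python
import math


def event_driven_replicas(pending_events: int,
                          events_per_replica: int,
                          min_replicas: int = 0,
                          max_replicas: int = 20) -> int:
    """Hypothetical sketch of backlog-based scaling with scale-to-zero."""
    if pending_events <= 0:
        # No work queued: drop to the minimum, which can be zero.
        return min_replicas
    # One replica per `events_per_replica` pending events, rounded up.
    desired = math.ceil(pending_events / events_per_replica)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    print(event_driven_replicas(0, 10))     # → 0, no idle workers
    print(event_driven_replicas(45, 10))    # → 5
    print(event_driven_replicas(5000, 10))  # → 20, capped at max_replicas
```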

Janitor and Kubedownscaler

These tools are useful in development and testing scenarios. kube-downscaler can scale down all the deployments in your cluster, for example outside working hours, and Janitor can clean up a deployment after a set period of time.
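Both tools boil down to simple time-based decisions. The sketch below shows a downscaler-style check (keep the normal replica count during working hours, scale to zero otherwise) and a janitor-style TTL check. The schedule, function names, and values are hypothetical. The real tools are configured through annotations, for example kube-downscaler's `downscaler/uptime` and kube-janitor's `janitor/ttl`, and they handle time zones, which this sketch ignores by using naive local times.

```python
from datetime import datetime, time, timedelta

# Hypothetical schedule: Monday (0) to Friday (4), 07:30 to 20:30 local time.
UPTIME_DAYS = range(0, 5)
UPTIME_START = time(7, 30)
UPTIME_END = time(20, 30)


def within_uptime(now: datetime) -> bool:
    """True if `now` falls inside the working-hours window."""
    return now.weekday() in UPTIME_DAYS and UPTIME_START <= now.time() < UPTIME_END


def target_replicas(now: datetime, normal_replicas: int, downtime_replicas: int = 0) -> int:
    """Downscaler-style decision: normal replicas during uptime, scaled down otherwise."""
    return normal_replicas if within_uptime(now) else downtime_replicas


def ttl_expired(created_at: datetime, ttl: timedelta, now: datetime) -> bool:
    """Janitor-style decision: a resource is due for cleanup once it outlives its TTL."""
    return now - created_at >= ttl


if __name__ == "__main__":
    print(target_replicas(datetime(2021, 7, 1, 10, 0), normal_replicas=3))  # Thursday → 3
    print(target_replicas(datetime(2021, 7, 3, 10, 0), normal_replicas=3))  # Saturday → 0
    created = datetime(2021, 7, 1, 9, 0)
    print(ttl_expired(created, timedelta(hours=24), now=datetime(2021, 7, 2, 10, 0)))  # → True
```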

Cost allocation

The Kubernetes labeling system lets you tag all Kubernetes objects. Most cost allocation solutions, such as Kubecost or Cloudability, use these labels to distribute costs by team or environment. This makes it possible to charge application resource costs back directly to the application owners, following FinOps principles. As a result, costs can be traced from a namespace back to a team, making cost another variable to consider within the DevOps lifecycle.
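The label-based approach can be sketched as follows: charge each pod's CPU request to whoever its `team` label names, and collect pods without the label in an explicit "unallocated" bucket. The price per core-hour, the pod names, and the use of CPU requests as the only cost basis are assumptions for illustration; real cost allocation tools also take memory, storage, network, and actual usage into account.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Pod:
    name: str
    cpu_request_millicores: int
    labels: Dict[str, str] = field(default_factory=dict)


def allocate_cpu_cost(pods: List[Pod],
                      cost_per_core_hour: float,
                      hours: float,
                      label_key: str = "team") -> Dict[str, float]:
    """Charge each pod's CPU request to the owner named in its label."""
    costs: Dict[str, float] = defaultdict(float)
    for pod in pods:
        # Pods without the label end up in a visible "unallocated" bucket.
        owner = pod.labels.get(label_key, "unallocated")
        cores = pod.cpu_request_millicores / 1000
        costs[owner] += cores * cost_per_core_hour * hours
    return dict(costs)


if __name__ == "__main__":
    # Hypothetical pods and a hypothetical price per core-hour.
    pods = [
        Pod("checkout", 500, {"team": "payments"}),
        Pod("payments-api", 1500, {"team": "payments"}),
        Pod("search", 1000, {"team": "discovery"}),
        Pod("debug-shell", 250),  # no team label
    ]
    report = allocate_cpu_cost(pods, cost_per_core_hour=0.04, hours=730)
    for owner, cost in report.items():
        print(f"{owner}: {cost:.2f}")
```

The "unallocated" bucket makes gaps in labeling visible instead of silently spreading their cost across teams.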

Shared resources, such as an ingress controller or a DNS server, are harder to account for and split between teams. The same applies to managed services, like a common database used by multiple tenants in the cluster.
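One common way to handle a shared component is to split its cost in proportion to a usage signal that each team drives, such as requests served by the ingress controller. The sketch below does exactly that and falls back to an even split when there is no usage data. The monthly cost, team names, and request counts are hypothetical, and choosing the right signal for each shared component is the actual judgment call.

```python
from typing import Dict


def split_shared_cost(shared_cost: float, usage_by_team: Dict[str, float]) -> Dict[str, float]:
    """Split a shared component's cost in proportion to each team's usage.

    Falls back to an even split when there is no usage signal at all.
    """
    if not usage_by_team:
        return {}
    total = sum(usage_by_team.values())
    if total == 0:
        even_share = shared_cost / len(usage_by_team)
        return {team: even_share for team in usage_by_team}
    return {team: shared_cost * usage / total for team, usage in usage_by_team.items()}


if __name__ == "__main__":
    # Hypothetical: an ingress controller costing 120 per month,
    # split by the number of requests it served for each team.
    requests_served = {"payments": 6_000_000, "discovery": 3_000_000, "internal-tools": 1_000_000}
    print(split_shared_cost(120, requests_served))
    # → {'payments': 72.0, 'discovery': 36.0, 'internal-tools': 12.0}
```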

Summary

Cost optimization for Kubernetes is not a one-and-done task. There are many techniques and tools you can apply, and experience is an important piece of the puzzle too. If you would like to work with an experienced provider of managed Kubernetes, contact us at Giant Swarm.

You can also find additional resources on the Giant Swarm YouTube channel and in our webinar archive.

You May Also Like

These Related Stories

Introduction to cost optimization in Kubernetes

Introduction The potential for cost saving is often one of the critical factors when deciding to move to open source and the cloud. Now, in the wake o …

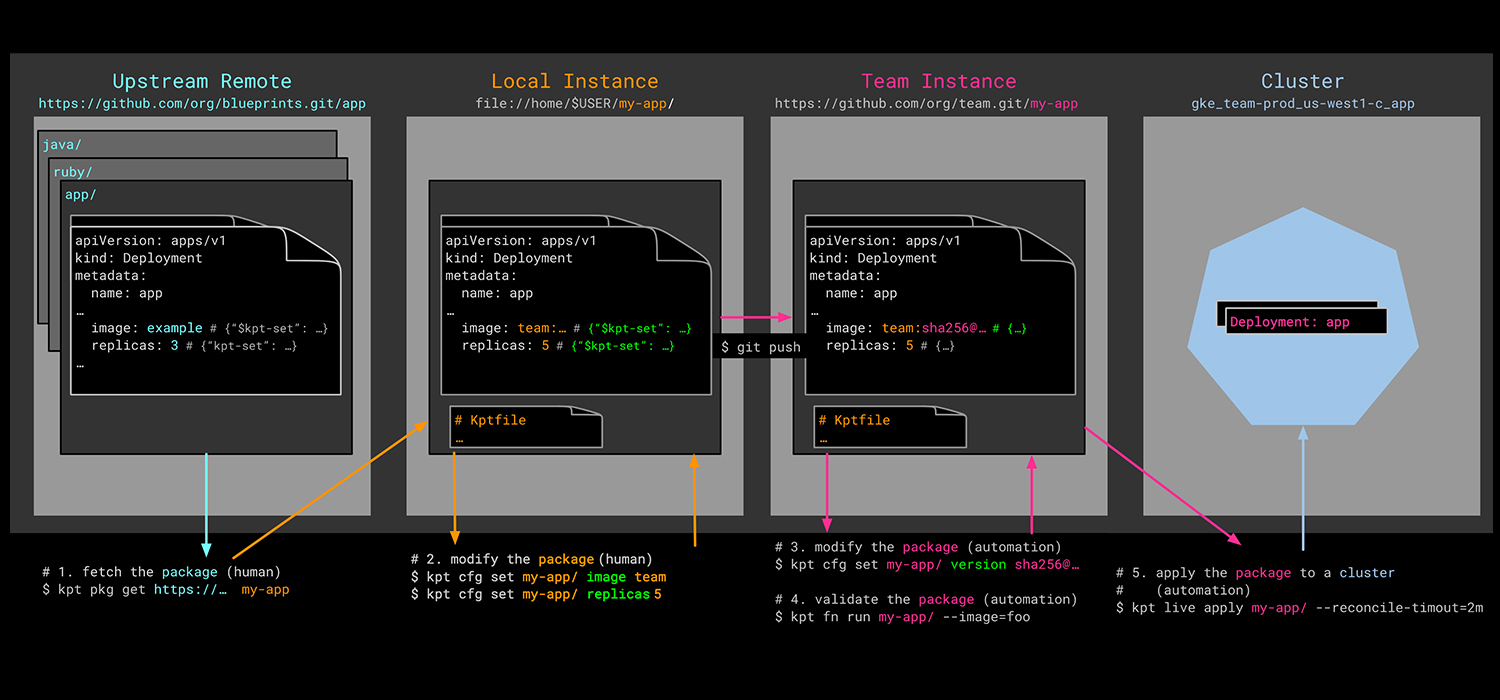

Application Configuration Management with Kpt

Through the course of this series on application configuration management in Kubernetes, we've looked into many of the popular approaches that exist i …

Application Configuration Management with Pulumi

As this series on application configuration management in Kubernetes has progressed, the predominant message that has emerged is the community's desir …