Monitoring On-Demand Kubernetes Clusters with Prometheus

by Joe Salisbury on Feb 27, 2018

Monitoring our infrastructure is of paramount importance at Giant Swarm, as our customers rely on us to provide fully operated clusters that power some of the world’s largest companies.

One of the major tools in our observability suite is Prometheus, which we use to power a large portion of our monitoring and alerting.

Giant Swarm lets you create as many Kubernetes clusters as you need, both in the cloud and on-premises. The high-level architecture has two parts. A ‘host’ Kubernetes cluster runs our API services, operators, and monitoring, including Prometheus. Through our API, the host cluster can create many ‘guest’ Kubernetes clusters, which run the actual customer workloads.

Monitoring Kubernetes clusters with Prometheus is straightforward and well documented. Our problem, however, is less common: we need to monitor the on-demand Kubernetes clusters that our platform manages.

Networking and Peering

On AWS, each guest cluster runs in its own VPC, possibly in a separate account. We peer the host and guest cluster VPCs, which allows the Prometheus server running in the host cluster to reach guest cluster components such as the Kubernetes API server. For this peering to work, we also ensure that each guest cluster's CIDR block does not overlap with that of the host cluster or of any other guest cluster. We take a similar approach on Azure to provide the same level of monitoring.
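Keeping the CIDR blocks disjoint comes down to an overlap check before a new guest cluster is assigned its range. Below is a minimal Python sketch, assuming the already-allocated blocks are known as a list of strings; the address plan, the `10.0.0.0/8` pool, and the function names are hypothetical and not Giant Swarm's actual allocation scheme.

```python
import ipaddress
from typing import Iterable


def find_overlaps(candidate: str, existing: Iterable[str]) -> list[str]:
    """Return every already-allocated CIDR block that overlaps the candidate."""
    new_net = ipaddress.ip_network(candidate)
    return [cidr for cidr in existing if new_net.overlaps(ipaddress.ip_network(cidr))]


def next_free_block(pool: str, prefix: int, existing: list[str]) -> str:
    """Pick the first subnet of the given size in the pool that overlaps nothing."""
    for subnet in ipaddress.ip_network(pool).subnets(new_prefix=prefix):
        if not find_overlaps(str(subnet), existing):
            return str(subnet)
    raise ValueError(f"no free /{prefix} block left in {pool}")


# Hypothetical address plan: the host cluster VPC plus two existing guest cluster VPCs.
allocated = ["10.0.0.0/16", "10.1.0.0/16", "10.2.0.0/16"]

print(find_overlaps("10.1.128.0/17", allocated))  # ['10.1.0.0/16']
print(next_free_block("10.0.0.0/8", 16, allocated))  # 10.3.0.0/16
```

Handing out fixed-size blocks from a single pool keeps the check cheap and makes it obvious which ranges are still free.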

Custom Service Discovery

We decided to build custom service discovery for Prometheus. Our use case is fairly specific, so upstreaming it would be of questionable value. We are open source by default, though, so anything we learn is public.

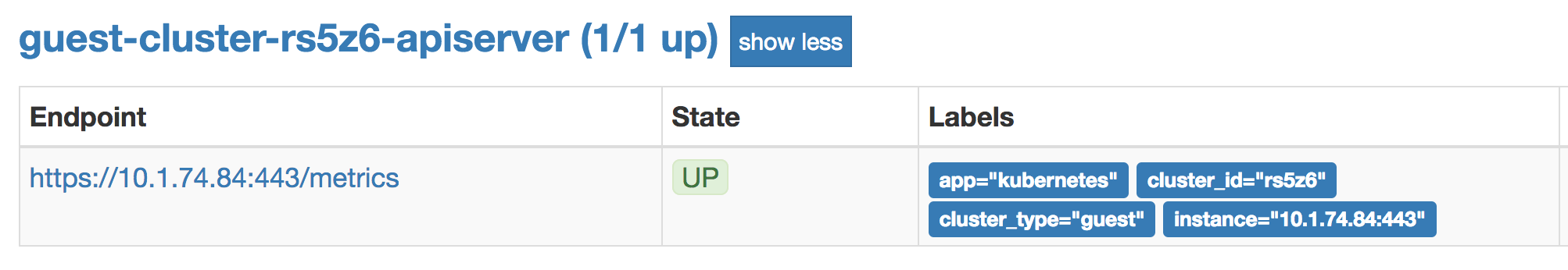

The operators that manage guest Kubernetes clusters on each provider (the aws-operator, azure-operator, and kvm-operator) are responsible for setting up a Kubernetes Service in the host cluster that resolves to the guest cluster's Kubernetes API server. These Services carry a special label that our custom service discovery looks for.
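The discovery side can be sketched in a few lines of Python, assuming the Services are available as plain dicts shaped like the Kubernetes API's JSON (for example, the `items` of a ServiceList). The label key `example.com/guest-cluster-api`, the convention that the Service name equals the guest cluster ID, and the function name are all hypothetical; the post does not say which label the operators actually set.

```python
from typing import Any

# Hypothetical label; the real label set by the operators is not named in the post.
DISCOVERY_LABEL = "example.com/guest-cluster-api"


def select_guest_api_services(services: list[dict[str, Any]]) -> list[dict[str, str]]:
    """Return the cluster ID and in-cluster DNS name of each specially labelled Service."""
    targets = []
    for svc in services:
        meta = svc.get("metadata", {})
        labels = meta.get("labels") or {}
        if labels.get(DISCOVERY_LABEL) != "true":
            continue  # not a guest cluster API Service
        targets.append({
            # Assumption: the Service name doubles as the guest cluster ID.
            "cluster_id": meta["name"],
            # Resolvable from host cluster Pods, assuming default cluster DNS search domains.
            "api_server": f"{meta['name']}.{meta['namespace']}.svc",
        })
    return targets


services = [
    {"metadata": {"name": "abc12", "namespace": "default",
                  "labels": {DISCOVERY_LABEL: "true"}}},
    {"metadata": {"name": "grafana", "namespace": "monitoring",
                  "labels": {"app": "grafana"}}},
]
print(select_guest_api_services(services))
# [{'cluster_id': 'abc12', 'api_server': 'abc12.default.svc'}]
```

In a real controller the filtering would more likely happen on the API server, by passing the same label as a label selector when listing Services.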

Our custom service discovery, the prometheus-config-controller, runs in the same Pod as Prometheus. It is built as a Kubernetes operator using our operatorkit framework and follows the general pattern of reconciling actual state towards desired state.

The prometheus-config-controller has two responsibilities: ensuring that the Prometheus configuration is correct, meaning it contains a scrape configuration for each guest cluster, and ensuring that the certificates needed to access each guest cluster are available.
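operatorkit is a Go framework and its API is not shown in this post, so the following is only an illustration of the reconcile pattern applied to these two responsibilities, written in Python: compare desired state against actual state and write only what differs. `PrometheusState`, `reconcile`, and the writer callbacks are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class PrometheusState:
    """What Prometheus should see: its config file and the guest cluster certificates."""
    config: str = ""
    certificates: dict[str, bytes] = field(default_factory=dict)  # file name -> PEM bytes


def reconcile(
    desired: PrometheusState,
    actual: PrometheusState,
    write_config: Callable[[str], None],
    write_certificates: Callable[[dict[str, bytes]], None],
) -> list[str]:
    """Move actual state towards desired state; return which parts were changed."""
    changed = []
    if desired.config != actual.config:
        write_config(desired.config)
        changed.append("config")
    if desired.certificates != actual.certificates:
        write_certificates(desired.certificates)
        changed.append("certificates")
    return changed


written = []
desired = PrometheusState("scrape_configs: []", {"abc12/crt.pem": b"cert"})
print(reconcile(desired, PrometheusState(), written.append, written.append))  # ['config', 'certificates']
print(reconcile(desired, desired, written.append, written.append))  # []
```

Because a reconcile only acts on differences, it is safe to run on every resync or watch event: once actual state matches desired state, it does nothing.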

It does this by listing the specially labelled Services in the host cluster (which point to guest cluster Kubernetes API servers) and writing a Prometheus scrape job for each guest cluster. Each job uses Prometheus's Kubernetes service discovery to find the Kubelets and other targets, with the Service's DNS name as the address of the Kubernetes API server.
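A per-cluster scrape job might look like the Python sketch below. `job_name`, `scheme`, `kubernetes_sd_configs` (with `role`, `api_server`, and `tls_config`), and `tls_config` (with `ca_file`, `cert_file`, and `key_file`) are real Prometheus configuration fields; the job naming, the certificate file layout, and the choice to scrape Kubelets directly over the peering connection with the same certificate are assumptions for illustration only.

```python
import json


def guest_cluster_job(cluster_id: str, api_server: str, cert_dir: str) -> dict:
    """Build one Prometheus scrape job that discovers a guest cluster's nodes (Kubelets)."""
    # Hypothetical file layout on the Volume shared with the controller.
    tls_config = {
        "ca_file": f"{cert_dir}/{cluster_id}/ca.pem",
        "cert_file": f"{cert_dir}/{cluster_id}/crt.pem",
        "key_file": f"{cert_dir}/{cluster_id}/key.pem",
    }
    return {
        "job_name": f"guest-cluster-{cluster_id}",
        "scheme": "https",
        # Kubernetes service discovery, pointed at the guest cluster API server
        # through the host cluster Service's DNS name.
        "kubernetes_sd_configs": [{
            "role": "node",
            "api_server": f"https://{api_server}",
            "tls_config": tls_config,
        }],
        # Assumption: the Kubelets accept the same certificate when scraped directly.
        "tls_config": tls_config,
    }


def render_config(targets: list[dict[str, str]], cert_dir: str) -> dict:
    """Render the scrape_configs section, sorted so the output is deterministic."""
    ordered = sorted(targets, key=lambda target: target["cluster_id"])
    return {"scrape_configs": [
        guest_cluster_job(t["cluster_id"], t["api_server"], cert_dir) for t in ordered
    ]}


config = render_config([{"cluster_id": "abc12", "api_server": "abc12.default.svc"}],
                       "/etc/prometheus/certs")
print(json.dumps(config, indent=2))
```

A real controller would serialize this to YAML; JSON output keeps the sketch free of third-party dependencies. Sorting matters: a stable rendering means the configuration only changes when a guest cluster is actually added or removed, which avoids needless reloads.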

For each guest cluster, we issue a certificate from Vault that Prometheus uses to access that cluster. The prometheus-config-controller reads this certificate from a Kubernetes Secret and writes it to a Volume shared between the prometheus-config-controller and Prometheus itself.
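The Secret-to-Volume step could look roughly like this Python sketch. It relies on the fact that a Secret's `data` values are base64-encoded, and it writes each file atomically so Prometheus never reads a half-written certificate. The function name, the key names such as `crt.pem`, and the directory layout are hypothetical; the Vault issuance itself is not shown.

```python
import base64
import os
import tempfile
from pathlib import Path


def sync_certificates(secret_data: dict[str, str], target_dir: Path) -> bool:
    """Write a Secret's base64-encoded `data` entries into target_dir.

    Returns True if any file was created or changed.
    """
    target_dir.mkdir(parents=True, exist_ok=True)
    changed = False
    for name, encoded in sorted(secret_data.items()):
        contents = base64.b64decode(encoded)  # Secret `data` values are base64-encoded
        path = target_dir / name
        if path.exists() and path.read_bytes() == contents:
            continue  # already up to date, leave the file alone
        tmp = path.with_name(path.name + ".tmp")
        tmp.write_bytes(contents)
        os.replace(tmp, path)  # atomic rename within the same directory
        changed = True
    return changed


# Usage: a temporary directory stands in for the shared Volume mount.
volume = Path(tempfile.mkdtemp()) / "abc12"
data = {"crt.pem": base64.b64encode(b"-----BEGIN CERTIFICATE-----...").decode()}
print(sync_certificates(data, volume))  # True: file written
print(sync_certificates(data, volume))  # False: nothing changed
```

Returning whether anything changed lets the caller decide whether a reload is needed. A fuller version would also remove files belonging to guest clusters that no longer exist.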

When the configuration in the ConfigMap, or the certificates, differ from what Prometheus has currently loaded, the prometheus-config-controller reloads Prometheus.
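The post does not say how the reload is triggered. Prometheus supports two real mechanisms: a POST to its `/-/reload` endpoint (enabled with the `--web.enable-lifecycle` flag) and a SIGHUP. The Python sketch below assumes the HTTP endpoint on `localhost:9090`, which is reachable because containers in the same Pod share a network namespace. As a simplification, it remembers a fingerprint of what it last reloaded rather than querying Prometheus for its loaded configuration. `fingerprint`, `reload_via_http`, and `Reloader` are hypothetical names.

```python
import hashlib
import urllib.request
from typing import Callable, Optional


def fingerprint(config_text: str, certificates: dict[str, bytes]) -> str:
    """Hash the config and certificates into one value that changes when any of them does."""
    h = hashlib.sha256()
    h.update(config_text.encode())
    for name in sorted(certificates):
        h.update(b"\0" + name.encode() + b"\0")  # separators keep entries unambiguous
        h.update(certificates[name])
    return h.hexdigest()


def reload_via_http(base_url: str = "http://localhost:9090") -> None:
    """Ask Prometheus to reload; /-/reload requires --web.enable-lifecycle."""
    req = urllib.request.Request(f"{base_url}/-/reload", method="POST")
    with urllib.request.urlopen(req, timeout=10):
        pass  # non-2xx responses raise urllib.error.HTTPError


class Reloader:
    """Reload Prometheus only when the config or certificates changed since the last reload."""

    def __init__(self, reload: Callable[[], None] = reload_via_http) -> None:
        self._reload = reload
        self._loaded: Optional[str] = None  # fingerprint of what was last reloaded

    def maybe_reload(self, config_text: str, certificates: dict[str, bytes]) -> bool:
        current = fingerprint(config_text, certificates)
        if current == self._loaded:
            return False
        self._reload()  # if this raises, _loaded is unchanged and the next call retries
        self._loaded = current
        return True


# Usage with a stub in place of the HTTP call.
reloads = []
reloader = Reloader(reload=lambda: reloads.append("reload"))
reloader.maybe_reload("scrape_configs: []", {"abc12/crt.pem": b"cert"})  # reloads
reloader.maybe_reload("scrape_configs: []", {"abc12/crt.pem": b"cert"})  # no-op
print(reloads)  # ['reload']
```

Updating the stored fingerprint only after a successful reload means a failed reload is retried on the next reconcile instead of being silently forgotten.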

Full Power

We have been using guest cluster scraping in production for over two months now with no major issues, apart from having to scale our Prometheus servers to handle the increased number of metrics.

We now have greater operational insight into the state of our guest clusters, and we can align our alerting between host and guest clusters. This allows us to provide a better product and service for our customers. Huzzah!
