The Graphic Guide to Evolving Cloud-native Deployments

by Oshrat Nir on Feb 6, 2020

This blog post was inspired by a talk given by Timo Derstappen, Giant Swarm Co-Founder and CTO.

Kubernetes was invented to make developers’ lives simpler. Indeed, getting started with Kubernetes is as easy as installing Minikube and pointing kubectl at it, and you’re ready to go. That holds for a pet project, but not once you want to take Kubernetes into production and run Day 2 operations.

You’ll have multiple teams, each focused on its own objectives and doing its best to reach them. As often happens in enterprises, these teams aren’t necessarily heading in the same direction at the same pace.

The change from an easy Kubernetes installation to a gridlocked environment happens gradually as you mature in your cloud-native journey.

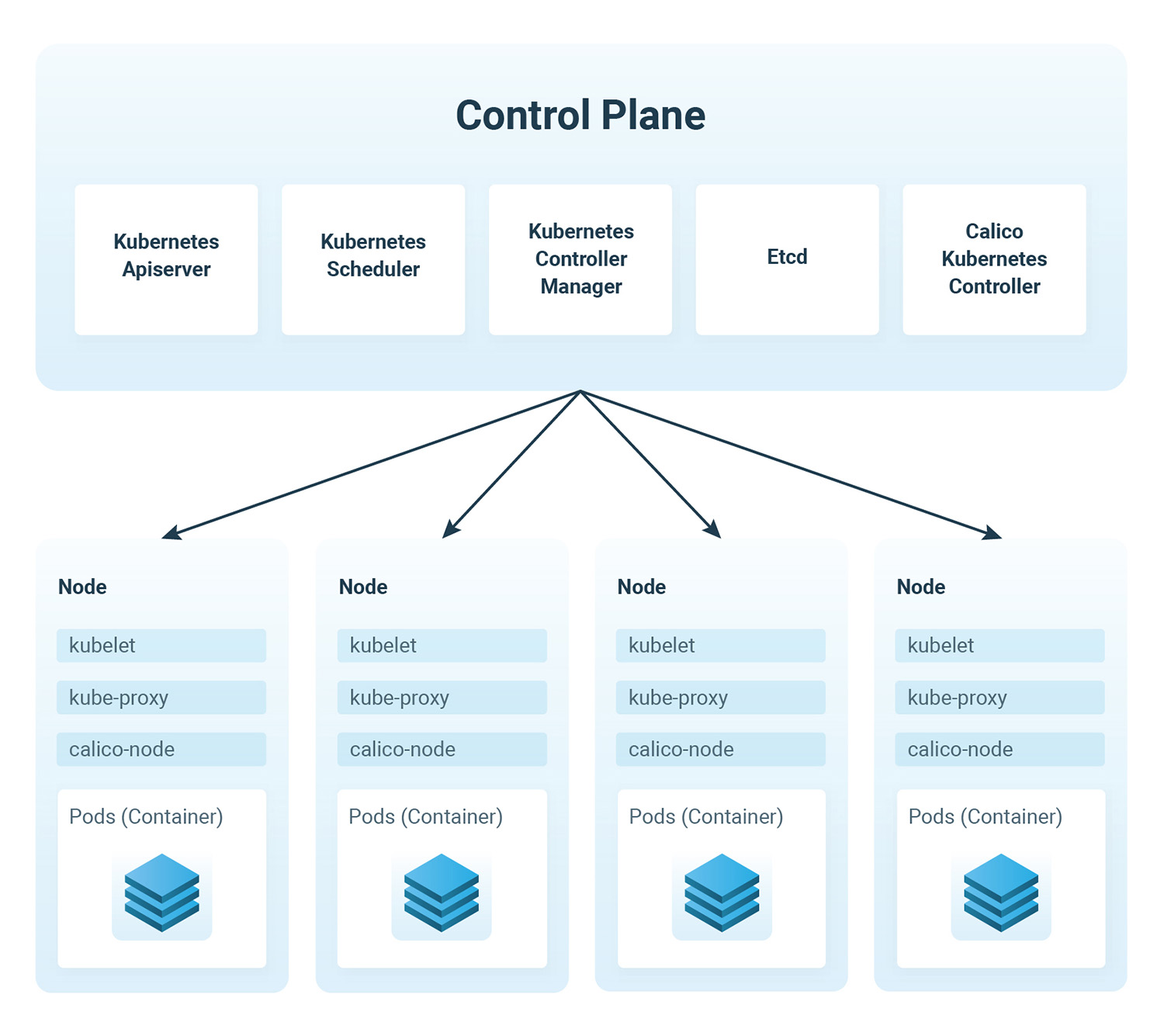

Your ‘Day 1’ installation looks something like this:

For ‘Day 2’ and beyond, you’ll find that you have started building a cloud-native stack.

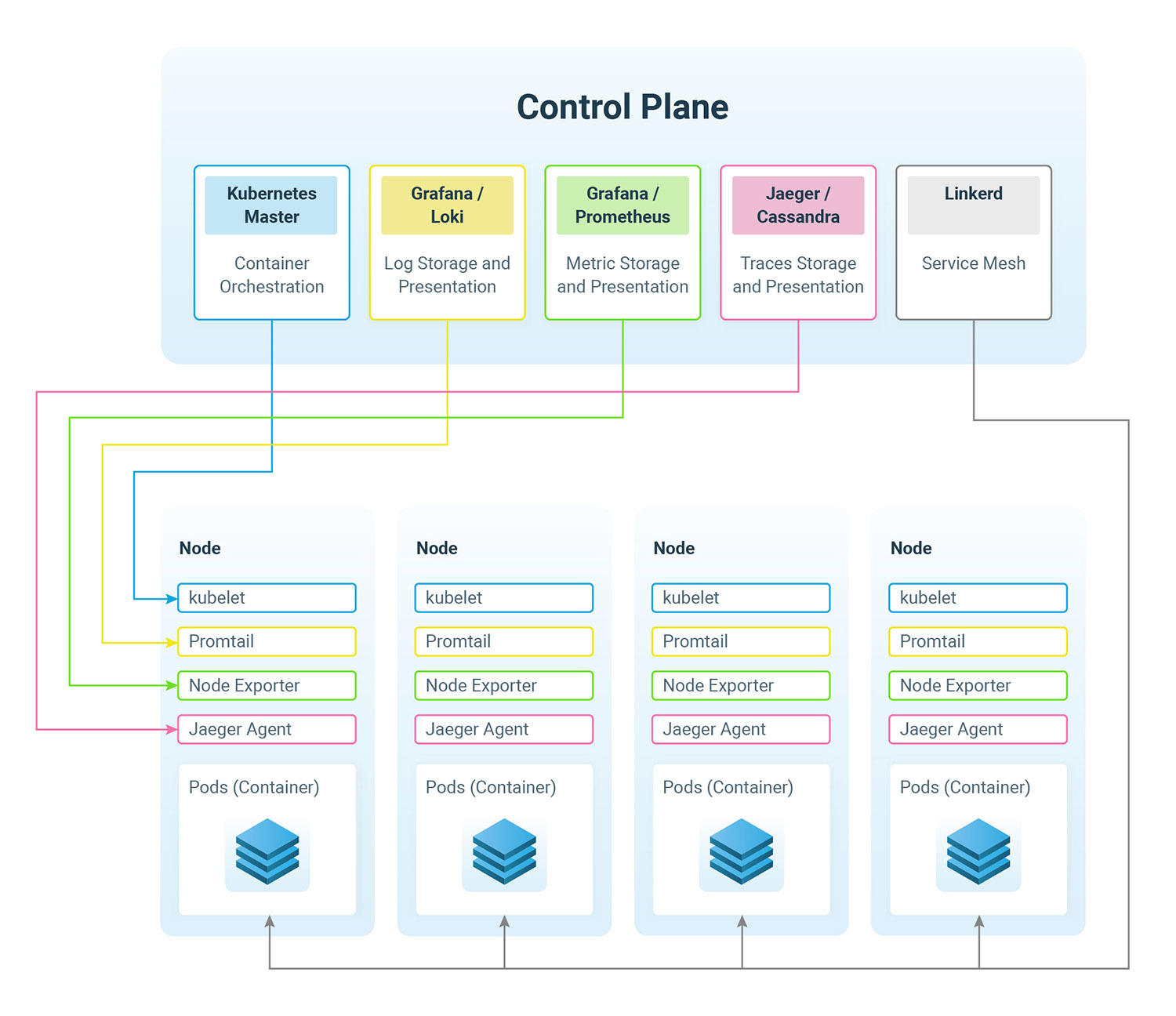

The result will look something like this:

It’s bigger but it’s still manageable.

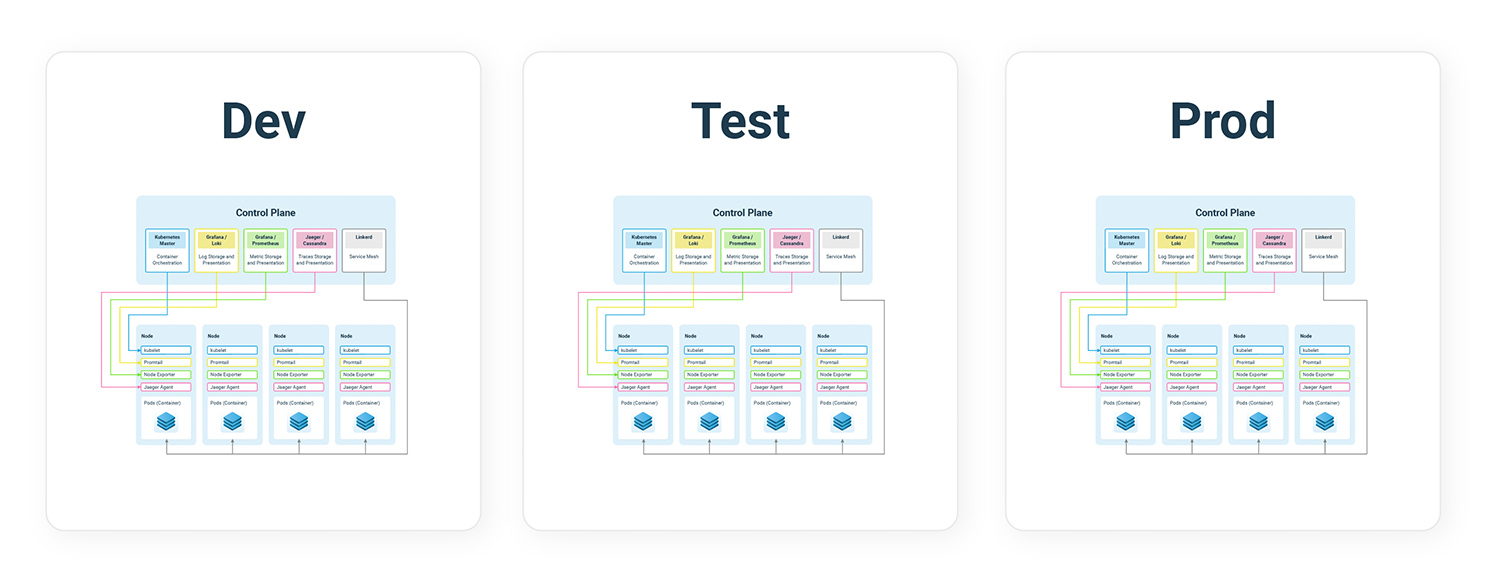

The thing is, best practice calls for multiple environments (for example, development, staging, and production) to build robust software. Suddenly you need a copy of the stack for each environment.

You’ll find it looks something like this:

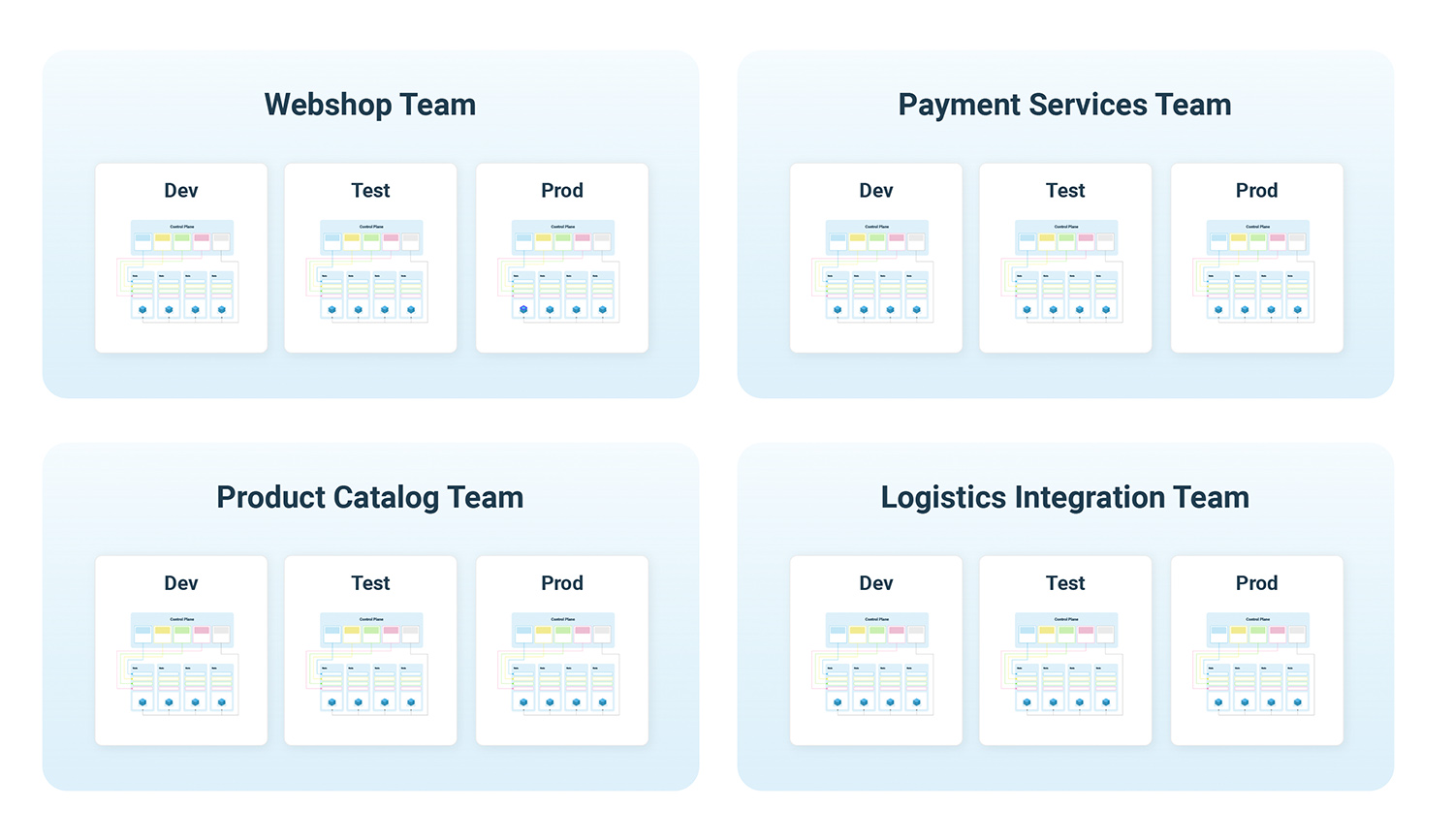

Truth be told, this covers only a single development team. With multiple development teams, the usual answer is to copy this setup per team or department.

It’ll then look something like this:

But what about regulation, cost, high availability, latency, and resilience? These considerations may push you towards a multi-region or even a multi-cloud setup.

As a result, you’ll end up with something like this:

So bit by bit, on your cloud-native journey, you discover that the business agility you are aiming for requires you to take care of multiple environments.

All of this exists so that your teams can stay independent, for example by choosing their own time to do an upgrade or a launch. The problem is that all of this complexity needs to be managed.

Dedicated tools and teams to manage this are one solution. At this point, though, some people in your organization may start wondering whether infrastructure has become your main business. We have a better solution.

A solution where we run your infrastructure, so you can run your business.

Giant Swarm is a team of highly experienced individuals. Our mission is to create and run the best platform for you. We run hundreds of clusters and are continually improving the platform on which they run.

Running so many clusters in production gives us an advantage. Once we identify a problem, we roll out the fix to all of our customers, regardless of whether they have run into that problem (yet).

I’m not just talking about knowledge that lives in people’s heads. That knowledge is continuously codified into our product in the form of Kubernetes operators. These operators continually compare each cluster’s actual state with its desired state and act to close any gap between the two. They are our central tool for keeping the infrastructure in the desired state.
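To make the “desired state” idea concrete, here is a minimal sketch of a reconciliation loop, the pattern operators are built around: observe the actual state, compare it with the desired state, and act on the difference until nothing is left to do. It is written in plain Python for readability and is not Giant Swarm’s code or any Kubernetes client API. `ClusterState`, `diff`, `reconcile`, `run_loop`, and the version numbers are all hypothetical, and applying an action is simulated rather than performed.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ClusterState:
    """Hypothetical, simplified view of a cluster: Kubernetes version and worker count."""
    version: str
    workers: int


def diff(desired: ClusterState, actual: ClusterState) -> list[str]:
    """Return the actions needed to move `actual` towards `desired`."""
    actions = []
    if actual.version != desired.version:
        actions.append(f"upgrade {actual.version} -> {desired.version}")
    if actual.workers < desired.workers:
        actions.append(f"add {desired.workers - actual.workers} worker(s)")
    elif actual.workers > desired.workers:
        actions.append(f"remove {actual.workers - desired.workers} worker(s)")
    return actions


def reconcile(desired: ClusterState, actual: ClusterState) -> tuple[ClusterState, list[str]]:
    """One pass of the loop: compare, then act on any difference."""
    actions = diff(desired, actual)
    if not actions:
        return actual, []  # already converged, nothing to do
    # Assumption: every action succeeds. A real operator would call an API
    # here, and a failure would simply be retried on a later pass.
    return ClusterState(desired.version, desired.workers), actions


def run_loop(desired: ClusterState, actual: ClusterState, max_passes: int = 3) -> tuple[ClusterState, list[str]]:
    """Repeat reconcile() until no actions remain (or max_passes is reached)."""
    log: list[str] = []
    for _ in range(max_passes):
        actual, actions = reconcile(desired, actual)
        log.extend(actions)
        if not actions:
            break
    return actual, log


if __name__ == "__main__":
    desired = ClusterState("1.16.3", 5)
    final, log = run_loop(desired, ClusterState("1.15.5", 3))
    print(log)               # ['upgrade 1.15.5 -> 1.16.3', 'add 2 worker(s)']
    print(final == desired)  # True
```

Two properties matter here: running the loop on an already converged cluster produces no actions, and any drift (say, someone manually scaling a cluster to 7 workers) shows up as a difference on the next pass and gets corrected. Real Kubernetes controllers typically react to watch events from the API server instead of running a fixed number of passes, but the compare-and-act core is the same.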

Less manual work = less human error

The bottom line is that complexity is not going away. It is simply shifting towards the infrastructure so that developers can move faster. Building your own platform will just slow you down. If you would like to achieve business velocity, we can help by running your infrastructure for you.
