Container Image Building with BuildKit

by Puja Abbassi on Jan 30, 2020

In the final article in this series on the State of the Art in Container Image Building, we return to Docker’s Moby project, where it all started, and to a sub-project called BuildKit.

BuildKit is the second-generation image builder provided by Docker’s Moby project and has been available since Docker CE 18.09. As we saw with the Img builder in an earlier article, BuildKit is not limited to use with Docker. It’s a general-purpose image build capability that can be consumed as a standalone binary (in daemon or daemonless mode) or as a library. In fact, BuildKit can build any artifact, not just container images, provided the build steps can be translated into its Low Level Builder (LLB) representation. We’re primarily concerned with container image building here, so let’s see what BuildKit brings to the party.

Build Step Optimization

One of the frustrations most often leveled at the original build backend provided by Docker is the sequential execution of the build steps for Dockerfile instructions. Since the introduction of multi-stage builds, it has been possible to group build steps into separate, logical build tasks (stages) within the same Dockerfile.

Sometimes these build stages are completely independent of each other, which means they could be executed in parallel, or need not be executed at all. Unfortunately, the legacy Docker image builder doesn’t cater for this flexibility: it executes stages one after another, and it runs every stage that precedes the build target, even stages the target doesn’t depend on. As a result, build times are often longer than necessary.

In contrast, BuildKit creates a graph of the dependencies between build steps and uses it to determine which parts of the build can be skipped, which can be executed in parallel, and which must be executed sequentially. This makes build execution much more efficient, which in turn is valuable to developers as they iterate on image builds for their applications.
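To make this concrete, here is a minimal sketch, assuming a Linux host with Docker 18.09 or later. The script writes a multi-stage Dockerfile in which two independent stages (frontend and backend) feed a final stage, while a docs stage is never referenced by it. The stage names, the alpine:3.11 base image and the example/app tag are hypothetical placeholders, and the build only runs if a Docker daemon is reachable.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical multi-stage Dockerfile: frontend and backend are independent,
# final depends on both, and docs is not referenced by final at all.
cat > Dockerfile <<'EOF'
FROM alpine:3.11 AS frontend
RUN echo "frontend assets" > /frontend.txt

FROM alpine:3.11 AS backend
RUN echo "backend binary" > /backend.txt

FROM alpine:3.11 AS docs
RUN echo "documentation" > /docs.txt

FROM alpine:3.11 AS final
COPY --from=frontend /frontend.txt /app/
COPY --from=backend /backend.txt /app/
EOF

# With BuildKit, frontend and backend can run in parallel and docs is skipped.
# The legacy builder would execute all four stages, one after another.
if docker info >/dev/null 2>&1; then
  DOCKER_BUILDKIT=1 docker build --target final -t example/app .
fi
```

Running the same build without DOCKER_BUILDKIT=1 is an easy way to compare the two backends on your own Dockerfiles.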

Efficient and Flexible Caching

Whilst the caching of build steps in the legacy Docker image build is extremely useful, it’s not as efficient as it could be. As a rewrite of the build backend, BuildKit improves on this with a much faster and more accurate caching mechanism: cache keys are derived from the dependency graph generated for an image build, and take into account both the instruction definition and the content each build step consumes.

Another huge benefit provided by BuildKit comes in the form of build cache importing and exporting. Just as Kaniko and Makisu allow for pushing a build cache to a remote registry, so does BuildKit. However, BuildKit gives you the flexibility to embed the cache within the image (inline) and have them pushed together (although not supported by every registry), or to have them pushed separately. The cache can also be exported to a local directory for subsequent consumption.

The ability to import a build cache comes into its own when a build environment is established from scratch with no prior build history to benefit from. Importing ‘warms’ the cache and is particularly useful for ephemeral CI/CD environments.
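The commands below sketch the three cache destinations described above using the Buildx plugin, which is introduced later in this article. They assume Buildx is installed, that a Dockerfile sits in the current directory, and that registry.example.com/team/app is a hypothetical repository you can push to. Registry and local cache exports generally require a builder created with the docker-container driver, whereas inline cache also works with the default docker driver.

```shell
#!/usr/bin/env bash
set -euo pipefail

IMAGE="registry.example.com/team/app"   # hypothetical repository
CACHE_DIR="./buildkit-cache"            # local cache directory

if docker buildx version >/dev/null 2>&1 && docker info >/dev/null 2>&1; then
  # 1. Inline: cache metadata travels inside the pushed image itself.
  docker buildx build \
    --cache-to type=inline \
    --cache-from "type=registry,ref=${IMAGE}:latest" \
    -t "${IMAGE}:latest" --push .

  # 2. Separate: the cache is pushed to its own tag; mode=max also caches
  #    the layers of intermediate stages, not just those of the final image.
  docker buildx build \
    --cache-to "type=registry,ref=${IMAGE}:buildcache,mode=max" \
    --cache-from "type=registry,ref=${IMAGE}:buildcache" \
    -t "${IMAGE}:latest" --push .

  # 3. Local directory: exported on one run, imported on the next.
  docker buildx build \
    --cache-to "type=local,dest=${CACHE_DIR}" \
    --cache-from "type=local,src=${CACHE_DIR}" .
fi
```

In an ephemeral CI job, the --cache-from half is what warms the cache: a previous job exports it, and a fresh runner with no build history imports it before building.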

Build Artifacts

When an image is built using the legacy Docker image builder, the resulting image is added to the cache of local images managed by the Docker daemon, and a separate docker push is required to upload it to a remote container image registry. Once again, the new breed of image building tools improves on this by letting you request an image push at the time of build invocation. BuildKit is no exception, and it also supports image output in several different formats: files in a local directory, a local tarball, a local OCI image tarball, a Docker image tarball, a Docker image loaded into the daemon’s local image cache, and a Docker image pushed to a registry. That’s a lot of formats!
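As a sketch, assuming the Buildx plugin (described later in this article), each build below maps to one of the output formats just listed. The directory, file and image names are hypothetical. Depending on the builder’s driver, some exporters (the OCI and Docker tarballs in particular) may require a builder created with the docker-container driver.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical single-stage Dockerfile used by every build below.
cat > Dockerfile <<'EOF'
FROM alpine:3.11
RUN echo "hello" > /hello.txt
EOF

if docker buildx version >/dev/null 2>&1 && docker info >/dev/null 2>&1; then
  docker buildx build --output type=local,dest=./rootfs .            # files in a local directory
  docker buildx build --output type=tar,dest=rootfs.tar .            # local tarball
  docker buildx build --output type=oci,dest=image-oci.tar .         # OCI image tarball
  docker buildx build --output type=docker,dest=image-docker.tar .   # Docker image tarball
  docker buildx build -t example/hello --load .                      # local Docker image cache
  docker buildx build -t registry.example.com/example/hello --push . # push to a registry
fi
```

The --load and --push flags are shorthands for --output type=docker and --output type=registry respectively.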

Extended Syntax

One of the most frequently repeated feature requests for the docker build experience is the safe handling of secrets that are required during image builds. The Moby project resisted this call for a number of years. But BuildKit’s flexible ‘frontend’ definitions made it possible to provide an experimental frontend that extends the Dockerfile syntax. The extended syntax adds useful options to the RUN Dockerfile instruction, security features among them.

```dockerfile
RUN --mount=type=secret,id=top-secret-passwd my_command
```

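A Dockerfile that references the experimental frontend can temporarily mount secrets for a RUN instruction: the secret is available (at /run/secrets/<id> by default) only while that instruction runs, and it is not stored in the resulting image. The secret itself is supplied to the build using the --secret flag for docker build. Similarly, the ssh mount type forwards the host’s SSH agent connection to a RUN instruction, so the step can authenticate over SSH without private keys being copied into the image.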
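The script below is a minimal sketch of both mount types, assuming Docker 18.09 or later on Linux and an SSH agent on the host with at least one key loaded. The syntax line on the first line of the Dockerfile selects the experimental frontend; the secret file, its contents and the example/secrets tag are hypothetical. Passing --ssh default forwards the agent that the host’s SSH_AUTH_SOCK points to.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical secret; in practice this would come from a CI secret store.
printf '%s' 'not-a-real-password' > top-secret-passwd.txt

cat > Dockerfile <<'EOF'
# syntax=docker/dockerfile:experimental
FROM alpine:3.11
RUN apk add --no-cache openssh-client
# The secret is readable only during this step and is not stored in any layer.
RUN --mount=type=secret,id=top-secret-passwd \
    test -s /run/secrets/top-secret-passwd
# The host's SSH agent is forwarded for this step only; list its keys.
RUN --mount=type=ssh ssh-add -l
EOF

if docker info >/dev/null 2>&1; then
  DOCKER_BUILDKIT=1 docker build \
    --secret id=top-secret-passwd,src=top-secret-passwd.txt \
    --ssh default \
    -t example/secrets .
fi
```

The id given to --secret must match the id in the mount; that shared name is the only link between the build command and the Dockerfile.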

The ability to extend the de facto Dockerfile syntax in this way is unique to BuildKit.

Consuming BuildKit

BuildKit has a host of other features that considerably improve the craft of building container images. Given that it’s a general-purpose tool suited to many different environments, how can it be consumed?

The answer varies depending on the environment in which you’re working. Let’s have a look.

Docker

Given that BuildKit is a Moby project, it will come as no surprise that it can be used as the preferred build backend with Docker (v18.09+). It’s not yet the default backend as it’s not supported on the Windows platform, but it’s easy enough to turn on when building images on Linux.

Setting the environment variable DOCKER_BUILDKIT=1 does the job for individual builds. For permanent use, add the key/value pair "features": {"buildkit": true} to the daemon’s configuration file (/etc/docker/daemon.json on Linux) and restart the daemon.
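Both options can be sketched as follows, assuming a Linux host. The script exports the variable for the current shell session and writes the daemon setting to a local daemon.json. The copy-and-restart step is left as a comment because it needs root, and any settings already present in /etc/docker/daemon.json would have to be merged in by hand.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Option 1: every `docker build` run from this shell session uses BuildKit.
export DOCKER_BUILDKIT=1

# Option 2: enable BuildKit permanently in the daemon configuration.
cat > daemon.json <<'EOF'
{
  "features": { "buildkit": true }
}
EOF

# Then, as root (after merging with any existing settings):
#   cp daemon.json /etc/docker/daemon.json
#   systemctl restart docker
```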

In this configuration, Docker doesn’t quite expose the full power of BuildKit, due to some current limitations in the Docker daemon. For that reason, the Docker client CLI has been extended with a plugin framework, which allows plugins to extend the functions available in the CLI. An experimental plugin called Buildx bypasses the legacy build function in the daemon and uses the BuildKit backend for all builds. It provides all of the familiar image building commands and features, and augments them with additional BuildKit-specific features.

BuildKit, and by extension Buildx, supports multiple builder instances. This is a significant feature that is not mirrored in the other image build tools in the ecosystem. In effect, a farm of builder instances can be shared for building purposes: one project might be assigned one set of builder instances, while another gets a different set.

```console
$ docker buildx ls
NAME/NODE  DRIVER/ENDPOINT  STATUS   PLATFORMS
default *  docker
  default  default          running  linux/amd64, linux/386
```

By default, the Buildx plugin targets the docker driver, which uses the BuildKit library built into the Docker daemon, with its inherent limitations. An alternative driver, docker-container, transparently launches BuildKit inside a container to perform builds, giving access to the full feature set of BuildKit. A third driver, for Kubernetes, makes BuildKit builder instances running in pods the target for image builds. This is particularly interesting, as it allows builds on BuildKit running in Kubernetes to be initiated entirely from the Docker CLI. Whether this is a desirable workflow to implement is entirely a matter of individual or corporate choice.

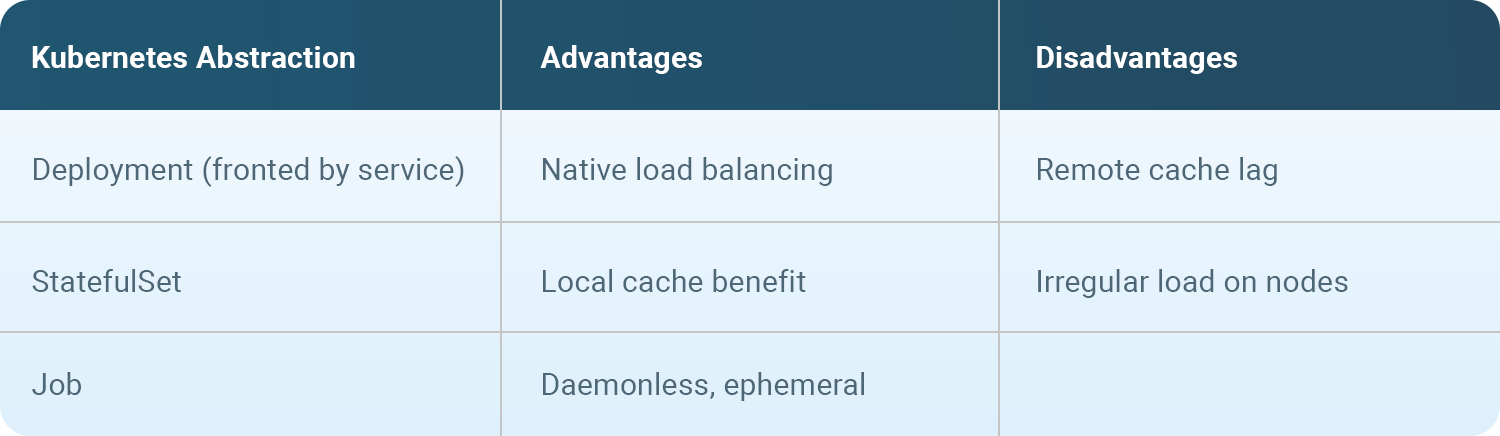
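The commands below sketch how builder instances using the docker-container and kubernetes drivers described above can be created and targeted. They assume Buildx is installed and, for the second builder, that kubectl’s current context points at a cluster with an existing buildkit namespace. The builder names, namespace, replica count and image tag are hypothetical, and the kubernetes driver is only available in Buildx releases that include it.

```shell
#!/usr/bin/env bash
set -euo pipefail

CONTAINER_BUILDER="container-builder"   # hypothetical builder names
K8S_BUILDER="k8s-builder"

if docker buildx version >/dev/null 2>&1 && docker info >/dev/null 2>&1; then
  # BuildKit in a container: exposes the full BuildKit feature set.
  docker buildx create --name "$CONTAINER_BUILDER" --driver docker-container --use
  docker buildx inspect --bootstrap        # starts the current builder and prints its status

  # BuildKit in Kubernetes pods, created in the current kubectl context.
  docker buildx create --name "$K8S_BUILDER" --driver kubernetes \
    --driver-opt namespace=buildkit,replicas=2

  # Send one build to the Kubernetes builder without changing the default.
  docker buildx build --builder "$K8S_BUILDER" -t example/app .

  docker buildx ls                         # lists all builders and their nodes
fi
```

Running docker buildx use default switches back to the builder backed by the Docker daemon.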

Kubernetes

Increasingly, organizations are implementing their application workflows on top of ephemeral infrastructure. This includes Kubernetes, and it’s common to see container image builds occurring in pods as part of CI/CD workflows. BuildKit can be run in Kubernetes in a number of different configurations, for example as a long-running buildkitd Deployment or StatefulSet that clients connect to, or as a daemonless Job for each build. Each deployment strategy has its advantages and disadvantages, and each will suit different purposes.
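For the long-running variant, a client talks to buildkitd over the network with BuildKit’s own buildctl CLI. A minimal sketch, assuming buildctl is installed locally and that buildkitd listens on tcp://buildkitd:1234 behind a Kubernetes Service named buildkitd (both hypothetical; a real deployment should protect this endpoint with TLS). The destination image is hypothetical too.

```shell
#!/usr/bin/env bash
set -euo pipefail

BUILDKITD_ADDR="tcp://buildkitd:1234"            # hypothetical Service address
IMAGE="registry.example.com/team/app:latest"     # hypothetical destination image

if command -v buildctl >/dev/null 2>&1; then
  # Build the Dockerfile in the current directory with the Dockerfile frontend
  # and push the result straight to a registry.
  buildctl --addr "$BUILDKITD_ADDR" build \
    --frontend dockerfile.v0 \
    --local context=. \
    --local dockerfile=. \
    --output "type=image,name=${IMAGE},push=true"
fi
```

When --addr is omitted, buildctl falls back to the BUILDKIT_HOST environment variable, which is a convenient way to point CI jobs at a shared builder.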

In addition to the developer-oriented builds initiated for BuildKit using the Docker CLI, builds can also be triggered by a variety of CI/CD tooling. Container image builds using BuildKit can be executed as a Tekton Pipeline Task, for example.

Conclusion

This article doesn’t have the space to cover some of the other features that BuildKit provides, such as multi-platform images and rootless image builds. However, it provides a flavor of the many significant improvements that BuildKit brings to container image building.

Whereas several of the new generation of build tools have sought to alleviate the problems associated with the traditional build process, BuildKit has sought to go a step further and innovate.

Of course, it’s early days for BuildKit, and some features need to mature and evolve with community adoption. Having been constrained to an adequate but imperfect container image build experience for so long, it may be a little bewildering to face the choice that’s available today. Some people will inevitably make their choice based on affiliation: Buildah for Red Hat, Kaniko for Google, or BuildKit for Docker. But it’s certainly true to say that the job of container image building has been made much easier by the different options available today. Happy image building!
