Beyond the Digital Climate Strike

by Ross Fairbanks on Sep 20, 2019

Today the global climate strike begins, and we're proud to be taking part in the digital climate strike. We are displaying the strike banner on our websites, with an overlay shown today and next Friday. Some of our team members are also joining the strike in various cities today and next week.

This is part of our journey to become a sustainable software company, which we wrote about during KubeCon Barcelona in May. But, as we said then, we don't have all the answers, and there is more we still need to do.

Travel

Our approach here is mostly unchanged from our previous post. We need to travel to visit our customers and to engage with the cloud-native community at conferences and meetups. Often flying is the only practical option, and since January 2019 we have offset all flights for business travel with Atmosfair.

One change is that we held our most recent company onsite earlier this month near Rome, rather than on an island like Mallorca as we did last year. We chose the location because it was easier to reach without flying. This meant team members from Germany and Spain could travel overland and visit Milan, Turin, and Lyon along the way!

Being a remote-first company continues to reduce our carbon footprint. It gives us flexibility about where we work, and there is no mandatory commute to an office, which would increase our carbon emissions.

Green Kubernetes

Kubernetes supports autoscaling of both pods and nodes. This can reduce the amount of server capacity we need to provision, and with it our costs.
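To make the capacity saving concrete, here is a minimal sketch of the kind of check the upstream cluster-autoscaler makes before removing a node: a node becomes a scale-down candidate when the CPU and memory requested by its pods are both below a utilization threshold (50% of the node's allocatable resources by default). The `Node` and `Pod` types are hypothetical simplifications; the real autoscaler also checks whether the pods can be rescheduled elsewhere, respects PodDisruptionBudgets, and more.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Pod:
    # Hypothetical, simplified pod: only its resource requests matter here.
    cpu_request_millicores: int
    memory_request_bytes: int


@dataclass
class Node:
    # Hypothetical, simplified node with its allocatable capacity and running pods.
    name: str
    allocatable_cpu_millicores: int
    allocatable_memory_bytes: int
    pods: List[Pod] = field(default_factory=list)


def utilization(node: Node) -> float:
    """Return the higher of the CPU and memory request ratios for a node."""
    cpu = sum(p.cpu_request_millicores for p in node.pods) / node.allocatable_cpu_millicores
    mem = sum(p.memory_request_bytes for p in node.pods) / node.allocatable_memory_bytes
    return max(cpu, mem)


def scale_down_candidates(nodes: List[Node], threshold: float = 0.5) -> List[str]:
    """Names of nodes whose CPU and memory requests are both below the threshold."""
    return [n.name for n in nodes if utilization(n) < threshold]


GIB = 1024 ** 3
nodes = [
    Node("node-a", 4000, 16 * GIB, [Pod(3000, 4 * GIB)]),  # 75% of CPU requested
    Node("node-b", 4000, 16 * GIB, [Pod(500, 2 * GIB)]),   # 12.5% of CPU and memory
]
print(scale_down_candidates(nodes))  # ['node-b']
```

Taking the maximum of the two ratios means a node that is busy on either resource is kept.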

On AWS, all our tenant clusters run the upstream cluster-autoscaler for scaling nodes. It is pre-installed and managed by us. We also manage metrics-server, which is needed by both the Horizontal and Vertical Pod Autoscalers. This is another thing our customers don't need to worry about, and many of them use it for pod autoscaling.
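metrics-server supplies the resource metrics that the Horizontal Pod Autoscaler acts on. The core HPA calculation, as described in the Kubernetes documentation, is `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, and no change is made while the ratio stays within a tolerance (0.1 by default). The sketch below is simplified: the `min_replicas`/`max_replicas` defaults are illustrative, and the real controller also handles missing metrics, pods that are not ready, and stabilization windows.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA replica calculation for a single metric."""
    ratio = current_metric / target_metric
    # Within the tolerance band the HPA leaves the replica count unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, 90, 60))  # 6: scale out under load
print(desired_replicas(4, 20, 60))  # 2: scale in when idle, freeing capacity
print(desired_replicas(4, 63, 60))  # 4: within tolerance, no change
```

Scaling in when utilization drops is what lets the cluster-autoscaler then remove the emptied nodes.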

Since Kubernetes provides an API for managing infrastructure, the approach can be taken further. It's still at the research stage, but a team at the University of Bristol in the UK has developed a low-carbon Kubernetes scheduler.

It works with the four main cloud providers and schedules workloads in the regions where electricity currently has the lowest carbon intensity. It does this using public APIs published by the electricity grids in each country.

The approach is interesting, but there are also significant challenges. It works well with workloads that can be interrupted, such as machine learning. However, these workloads often need large amounts of data that will not be available in every region.
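The scheduling idea, and the data problem, can be sketched in a few lines. This is not the Bristol team's code: it assumes the current carbon intensity of each region (in gCO2/kWh) has already been fetched from the grid APIs, and the region names and values in the example are hypothetical placeholders, not real measurements. Restricting the choice to regions that already hold a job's data shows how the greenest region can be ruled out.

```python
from typing import Dict, Optional, Set


def pick_region(intensity: Dict[str, float], data_regions: Optional[Set[str]] = None) -> str:
    """Return the region with the lowest current carbon intensity (gCO2/kWh).

    If data_regions is given, only regions that already hold the job's data are eligible.
    """
    candidates = {
        region: grams for region, grams in intensity.items()
        if data_regions is None or region in data_regions
    }
    if not candidates:
        raise ValueError("no eligible region")
    return min(candidates, key=candidates.get)


# Hypothetical placeholder values, not real measurements.
intensity = {"region-a": 120.0, "region-b": 310.0, "region-c": 450.0}
print(pick_region(intensity))                            # region-a
print(pick_region(intensity, {"region-b", "region-c"}))  # region-b: data locality wins
```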

Energy Grid Flexibility

Grid flexibility is one of the solutions identified by Project Drawdown, a project researching the solutions we need for the climate crisis. As we move more electricity generation to wind and solar, the grid becomes less predictable and the need for energy storage increases.

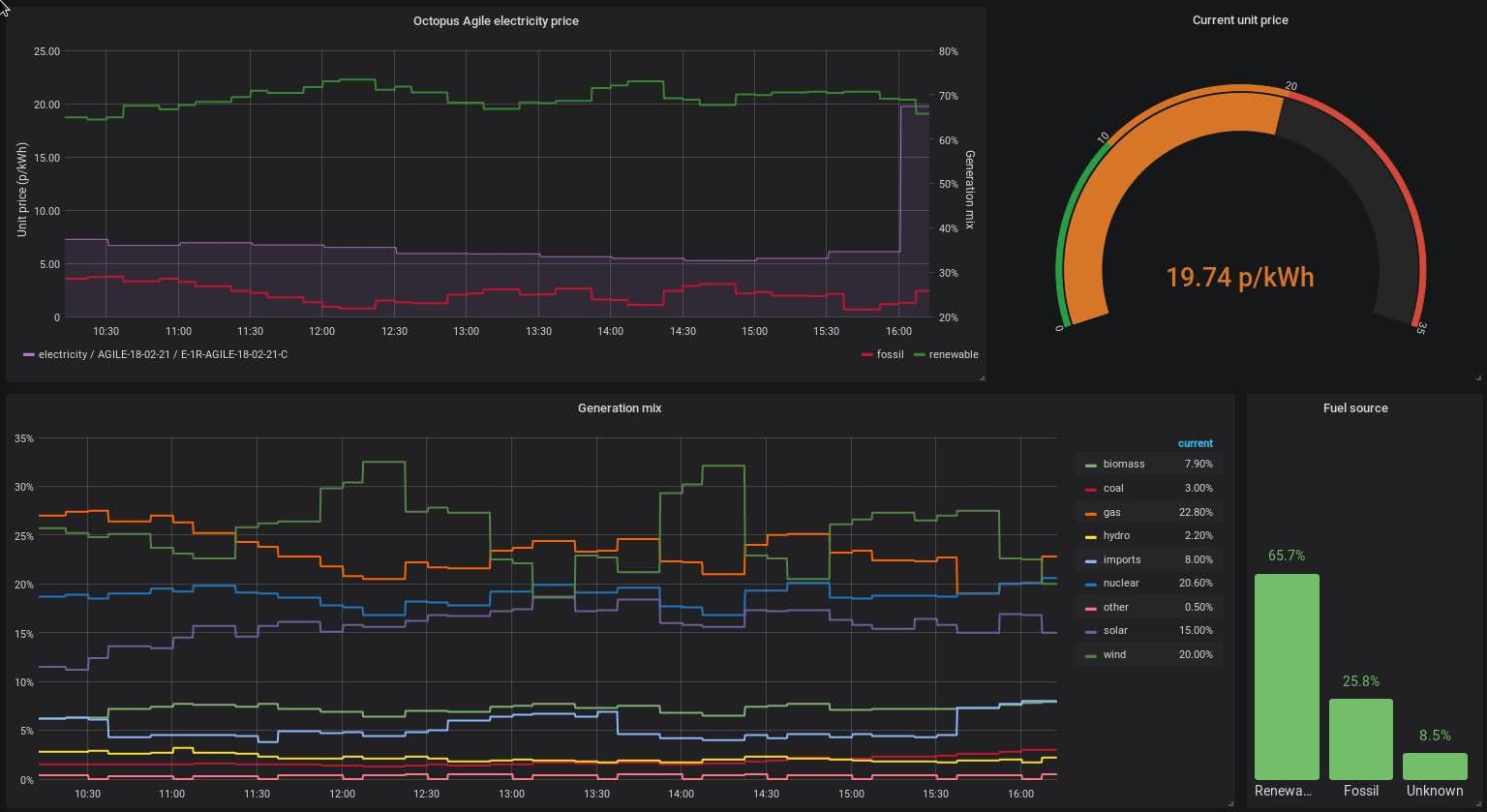
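Flexibility can also come from the demand side: deferrable work can be shifted to the hours when the grid is cleanest. A minimal sketch, assuming a forecast of carbon intensity per half-hour slot is available (the numbers below are placeholders), finds the start slot that minimizes total intensity over a job lasting a given number of slots, using a sliding-window sum.

```python
from typing import List, Tuple


def best_window(forecast: List[float], slots: int) -> Tuple[int, float]:
    """Return (start_index, total_intensity) of the lowest-carbon run of consecutive slots."""
    if not 0 < slots <= len(forecast):
        raise ValueError("slots must be between 1 and len(forecast)")
    window = sum(forecast[:slots])
    best_start, best_total = 0, window
    for start in range(1, len(forecast) - slots + 1):
        # Slide the window by one slot: add the newest slot, drop the oldest.
        window += forecast[start + slots - 1] - forecast[start - 1]
        if window < best_total:
            best_start, best_total = start, window
    return best_start, best_total


forecast = [250, 240, 180, 120, 110, 150, 260, 300]  # placeholder gCO2/kWh per half-hour
print(best_window(forecast, 3))  # (3, 380)
```

The sliding sum keeps the search linear in the forecast length, which matters little here but avoids re-summing each window.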

This grid flexibility will require software and public APIs like the ones used by the low-carbon scheduler. Simon, one of our SREs in the UK, has been using these APIs with his local electricity supplier. The data is exported to Prometheus, and a Grafana dashboard shows the current energy mix.
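Simon's exact setup isn't described here, so the following is only a sketch of how such data could reach Prometheus. It assumes the shape of the GB Carbon Intensity API's `/generation` response (a `data.generationmix` list of `{"fuel", "perc"}` entries) and renders it in the Prometheus text exposition format, which a small HTTP endpoint or node_exporter's textfile collector could then expose for scraping. The metric name `grid_generation_mix_percent` is hypothetical, and the sample payload is abbreviated with placeholder values.

```python
import json

METRIC = "grid_generation_mix_percent"  # hypothetical metric name


def to_prometheus(payload: str) -> str:
    """Render a /generation-style JSON payload as Prometheus text exposition format."""
    mix = json.loads(payload)["data"]["generationmix"]
    lines = [
        f"# HELP {METRIC} Share of current electricity generation by fuel type.",
        f"# TYPE {METRIC} gauge",
    ]
    for entry in mix:
        # One gauge sample per fuel type, labelled with the fuel name.
        lines.append(f'{METRIC}{{fuel="{entry["fuel"]}"}} {entry["perc"]}')
    return "\n".join(lines) + "\n"


# Abbreviated sample with placeholder values.
sample = '{"data": {"generationmix": [{"fuel": "wind", "perc": 30.5}, {"fuel": "gas", "perc": 35.0}]}}'
print(to_prometheus(sample), end="")
```

A Grafana panel can then graph the gauge, grouped by the `fuel` label.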

More @grafana goodness thanks to the Carbon Intensity API and @octopus_energy 🐙.

— Simon Weald (@glitchcrab) September 5, 2019

Of course, we'd like this software to run on Kubernetes. It may also need to run close to the physical generation and storage infrastructure. We're already seeing Kubernetes deployed at the edge in shops and factories, and this could be another use case.

Data Centers

But back to the present and the core of what we do. It starts with our mission statement, in which we state that "We give companies freedom to build the software that runs our world".

That software runs on servers and those servers use electricity. We manage Kubernetes clusters for our customers on AWS, Azure, and on-premises.

We don't manage Kubernetes on Google Cloud, but credit is due there. Google Cloud match the energy their data centers consume with energy from sustainable sources. They also apply their machine learning expertise to how they manage their data centers.

On-Premises

Our on-premises testing infrastructure runs virtualized through Gridscale. The data center behind our machines there is run by Interxion, which runs on 100% renewable energy.

Azure

All regions on Azure are carbon neutral, which is achieved through offsetting. We run our own website and docs site there as part of "eating our own dogfood". We also run test infrastructure and automated integration tests on Azure.

Offsetting is good, but problems have also been found with several offsetting schemes. So we'd like to see Azure procure sustainable energy directly, as Google Cloud does.

AWS

We run most of our test infrastructure and automated integration tests in eu-central-1 in Frankfurt. Frankfurt, along with Dublin, Oregon, and Canada, is one of the four AWS regions that are carbon neutral. We also run a test control plane in cn-north-1 in Beijing, which isn't carbon neutral, so we keep our tests there to a minimum.

However, this is just our own infrastructure. We manage infrastructure for our customers in several regions that are not carbon neutral. AWS have a goal of being 100% sustainable, but there is no date attached to this commitment.
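As a small illustration of keeping track of this, the sketch below flags clusters running outside the regions this post lists as carbon neutral (eu-central-1 in Frankfurt, eu-west-1 in Dublin, us-west-2 in Oregon, and ca-central-1 in Canada, as of September 2019). The cluster names and their regions are hypothetical, and the region set would need updating as AWS's own list changes.

```python
from typing import Dict, List

# Regions described as carbon neutral in this post (September 2019).
# Assumption: this set must be kept in sync with AWS's published list.
CARBON_NEUTRAL_REGIONS = {"eu-central-1", "eu-west-1", "us-west-2", "ca-central-1"}


def non_neutral_clusters(cluster_regions: Dict[str, str]) -> List[str]:
    """Return the sorted names of clusters running outside the carbon-neutral regions."""
    return sorted(
        name for name, region in cluster_regions.items()
        if region not in CARBON_NEUTRAL_REGIONS
    )


# Hypothetical cluster inventory.
clusters = {
    "test-frankfurt": "eu-central-1",
    "test-beijing": "cn-north-1",
    "customer-x": "ap-southeast-1",
}
print(non_neutral_clusters(clusters))  # ['customer-x', 'test-beijing']
```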

This is why Timo, our CTO, is one of the signatories of an open letter to Andy Jassy, the CEO of AWS. The letter asks AWS to:

- Commit to a roadmap for all global AWS infrastructure to be 100% renewable by 2024 or earlier without using carbon offsetting.
- Provide transparency about the energy sources of 'sustainable' AWS regions through independent auditing.
- Provide a simple way to see our organizations' CO2 emissions from existing AWS services by April 2020.

Conclusion

Climate change is a big issue for every inhabitant of Earth. Many individuals and even companies think that this problem is too big to be solved by "little me". As you have read above, there is a lot that can be done. Everything described above is what we, as a 50-person company working in the cloud-native space, can do. Many of these initiatives were brought to the company's attention by individual employees who care. You are never too small to make a difference.
