Application Configuration Management with Helm

by Puja Abbassi on May 25, 2020

Following on from our introductory article on application configuration management in Kubernetes, this article looks at the topic from a Helm perspective.

Helm is an open-source project that describes itself as "The Package Manager for Kubernetes". It's a graduated project of the Cloud Native Computing Foundation (CNCF), and dates back to late 2015. This means that it has grown up almost in lockstep with Kubernetes itself. A testament to this fact is that Helm has a symbiotic relationship with Kubernetes, and is generally considered its de facto mechanism for managing applications. In fact, if you have an application that you want to make available to would-be consumers, then you'd best have a Helm chart for people to make use of. Even better, get it published on the public Helm Hub repository.

What is Helm?

Helm describes itself as a package manager, similar in concept to Apt or Yum on Linux, Homebrew on macOS, or Chocolatey on the Windows platform. In this sense, Helm allows you to bundle up a related set of YAML definitions for a Kubernetes application into a composable, distributable unit called a "chart". In addition, it enables you to install, upgrade, and roll back different versions of applications (releases) that you want to deploy to a Kubernetes cluster. It even allows you to define the dependencies on other charts that need to be satisfied in order for the application to be deployed successfully.
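
As a rough illustration of how such dependencies are declared, here is a minimal sketch of a `Chart.yaml`, assuming the Helm v3 chart format (`apiVersion: v2`); in Helm v2 the same list lived in a separate `requirements.yaml` file. The chart name, versions, and repository URL are hypothetical placeholders rather than anything taken from a real chart.

```yaml
# Chart.yaml: a sketch only; names, versions and the URL are illustrative
apiVersion: v2            # chart API version used by Helm v3
name: myapp               # hypothetical chart name
description: An example application chart
version: 0.1.0            # version of the chart itself
appVersion: "1.0.0"       # version of the packaged application
dependencies:
  - name: postgresql      # hypothetical dependency chart
    version: "~8.6.0"     # semver range: >=8.6.0, <8.7.0
    repository: https://charts.example.com   # placeholder repository
```

With a file like this in place, `helm dependency update` fetches the listed charts into the chart's `charts/` directory.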

These features are all extremely useful, but perhaps its biggest benefit is found in its ability to nuance the configuration of applications at the point of deployment. Applications are frequently configured to suit a specific purpose, and the subtle differences in configuration provide a more utilitarian experience. This is a difficult nut to crack for application providers, so let's see how Helm goes about providing this flexibility.

Helm Templating

At the heart of Helm's flexible packaging technique is its templating facility. Instead of providing YAML definitions with immutable values, chart authors can use a templating syntax for values that are considered customizable on deployment. Before installation, Helm renders the resource definition by inserting specific values into the resource's manifest in place of the template syntax. The values are read from the chart's default `values.yaml` file, from a user-provided values file, or from the Helm command line itself.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      <snip> ...
    spec:
      <snip> ...
      template:
        <snip> ...
        spec:
          containers:
            - name: {{ .Chart.Name }}
              image: "{{ .Values.grafana.image.repository }}:{{ .Values.grafana.image.tag }}"
              <snip> ...

The Helm template snippet above lets us provide part of a generic definition of an application, whilst deferring the choice of the precise container image until deployment time. If you don't like the default values, alternative values for the container image repository and tag can be provided in a custom `values.yaml` file.
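
To make this concrete, a custom values file for the snippet above might look like the following sketch. The key structure mirrors the `.Values.grafana.image.*` references in the template; the file name, registry host, and tag are assumptions for illustration only.

```yaml
# my-values.yaml (hypothetical file name)
# Only the keys we want to change need to appear here; anything omitted
# falls back to the defaults in the chart's own values.yaml.
grafana:
  image:
    repository: registry.example.com/grafana/grafana   # assumed private registry
    tag: "7.0.0"                                        # illustrative tag, quoted to keep it a string
```

A file like this is passed to Helm with the `-f` (or `--values`) flag at install or upgrade time, and a single value can instead be set inline, for example with `--set grafana.image.tag=7.0.0`.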

This is fantastic! The templating language, which is largely based on the Go programming language's text/template syntax, even provides control logic. Chart authors can use conditional flow syntax, as well as iteration using its `range` operator. With this power and flexibility at the fingertips of chart authors, what could possibly go wrong with packaging cloud-native applications with Helm?
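
As a sketch of that control logic, the hypothetical ConfigMap template below uses `if` to include a key conditionally and `range` to iterate over a map. The `.Values.debug` and `.Values.env` keys are invented for the example; `quote` is one of the extra template functions that Helm makes available.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: {{ .Release.Name }}-env
data:
  # Rendered only when the (hypothetical) debug value is truthy
  {{- if .Values.debug }}
  LOG_LEVEL: "debug"
  {{- end }}
  # One entry per key in the (hypothetical) env map, with the value quoted
  {{- range $key, $value := .Values.env }}
  {{ $key }}: {{ $value | quote }}
  {{- end }}
```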

Templating Challenges

Clearly, solving the application configuration management problem in Kubernetes relies on having a credible technical solution. Helm has this. But, perhaps of equal importance, is that the technical solution needs to be practicable.

If the solution can't be used simply and effectively, the chances are it won't get used at all.

Or, it will give rise to a multitude of alternative solutions that seek to solve the same problem. Unfortunately, this is where we are with configuration management in Helm, which has triggered a splintered approach in the community. So, what exactly are the problems people are grappling with in Helm usage?

Readability

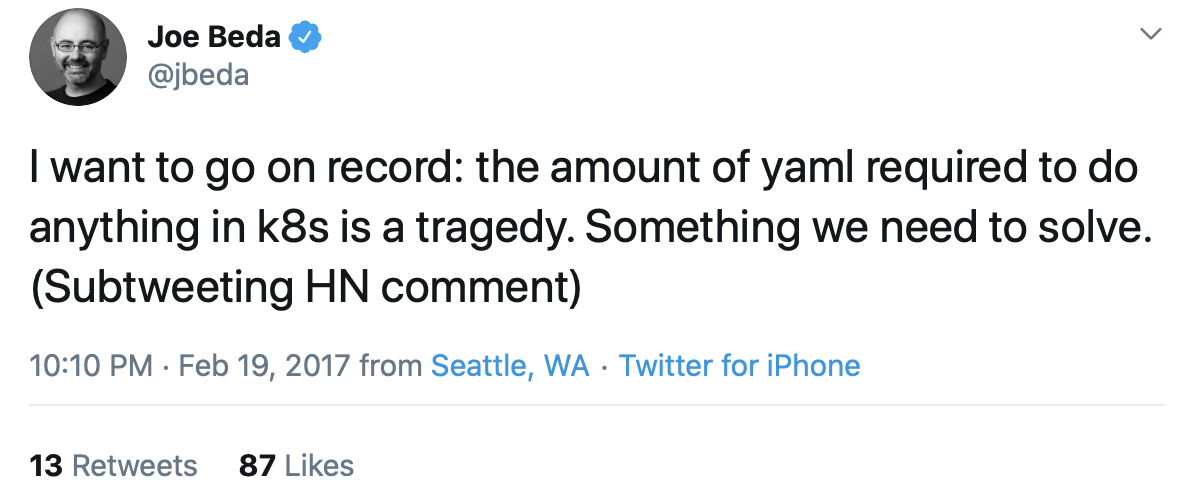

Perhaps with good reason, people in the Kubernetes community are prone to complaining about how much YAML syntax they have to create, digest and maintain! But imagine if the multitude of YAML Kubernetes API resource definitions you deal with are also littered with Golang text/template syntax. In fact, imagine that virtually every line of YAML is customizable using Helm template code. This is the case for most authored Helm charts, including the chart for the community-maintained Nginx ingress controller, for example.

That's a lot of YAML, but also a lot of template code. And, whilst YAML is relatively easy on the eye, the same can't be said for Golang text/template syntax; it tends to make your eyes bleed.

The mix of YAML and templating language in one definition makes those definitions very verbose, difficult to read and maintain, and often prone to syntactical error. Add to this an unfamiliarity with the rather flat, terse syntax of the templating language, and it's easy to see why people don't fall in love with Helm templating.

Language Limitations

It's not just the aesthetics and verbosity of the templating language that present a challenge either. A templating language is good for, well, templating. But, in Helm it feels as if the use case has outstripped the language's capabilities. This is exemplified by Helm's use of the extended template functions provided by the Sprig library. So, why was Golang text/template chosen in the first place?

In the beginning, the Helm project envisaged support for multiple templating languages: Golang text/template, Jinja, and even Python. However, supporting multiple templating languages introduced more problems than it solved, and the community soon fell back on the Golang text/template solution. Helm is written in Golang, as are many other cloud-native tools, including Kubernetes. For that reason, it was a relatively easy choice to make. However, the Helm project's flirtations with different templating language solutions haven't stopped at Golang text/template. As we'll expand on later in the article, the big migration from Helm v2 to v3 was intended to add Lua scripting into the templating mix. It didn't make it into the initial cut for v3.

Whilst the Helm templating language (with its extensions) is quite powerful, it has some shortcomings when considered for its intended purpose. The language is used to define YAML, yet it has no built-in knowledge of YAML, and works with strings rather than structured objects. This means chart developers need to be very wary when defining their templates.

For example, it remains the responsibility of the chart author to ensure that the values that replace the template syntax are rendered appropriately (e.g. strings are rendered within quotes, where required).
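
A small sketch of the quoting problem, assuming a hypothetical `buildTag` value that the user sets to the string `"1.10"`:

```yaml
# Template fragment; .Values.buildTag is a hypothetical value set to "1.10"
metadata:
  annotations:
    # Unquoted: renders as `build: 1.10`, which a YAML parser reads as
    # the number 1.1, even though annotation values must be strings
    build: {{ .Values.buildTag }}
    # Quoted: renders as `build-quoted: "1.10"`, so it stays a string
    build-quoted: {{ .Values.buildTag | quote }}
```

The template engine substitutes the same text in both cases; it's only the surrounding quotes, added by the chart author, that tell the YAML parser how to interpret it.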

<snip>

    spec:
      selector:
        matchLabels:
          app: {{ template "myapp.fullname" . }}
          {{- if .Values.agents.podLabels }}
    {{ toYaml .Values.agents.podLabels | indent 6 }}
          {{- end }}
      template:

<snip>

YAML is also notoriously sensitive about whitespace, and because the templating language has no notion of this sensitivity, it can't automatically provide the correct indentation for the resource fields. Once again, it's up to the chart author to get to grips with 'whitespace chomping', or to define explicit indentation within the template.
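
A minimal sketch of the two techniques follows, assuming a hypothetical `.Values.podLabels` map. The leading dash in `{{-` trims the whitespace (including the newline) before the action, and `nindent` starts a new line and indents every line of the rendered YAML by the given number of spaces.

```yaml
# Template fragment; .Values.podLabels is a hypothetical map of extra labels
spec:
  template:
    metadata:
      labels:
        app: myapp
        {{- with .Values.podLabels }}
        {{- toYaml . | nindent 8 }}
        {{- end }}
```

Note that the `8` is simply the column at which the labels happen to sit in this fragment. If the surrounding YAML is restructured, the number has to be updated by hand, which is exactly the kind of bookkeeping the templating language can't do for you.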

These are just a couple of the more obvious limitations, but there are many more. Whilst these limitations don't prevent the creation of comprehensive, flexible packaged charts for Cloud Native applications, they do provide a barrier to people just getting started.

Chart Consumption

If you have free rein over the content of a Helm chart, you have the freedom to make the application and its deployment parameters as configurable as you need. All it takes is some time investment to get familiar with chart structures and the templating language. But, if you're consuming someone else's chart, you're entirely reliant on the content the author provides. If a resource field isn't templated, you can't override its value! If the chart author has taken the time to consider all the ways her chart may get consumed, this problem may never occur. If it does, you may well end up having to fork the chart to amend and maintain a copy for your own purposes. This, of course, introduces an overhead to the chart consumer. Not only do they have to maintain their own version of the chart, but they also need to be aware of evolving upstream changes.

These issues of practical chart consumption affect many organizations, including us at Giant Swarm.

We have a general requirement to host container images in registries according to region, yet no upstream charts parameterize the registry component of image names. They are all hard-coded for a specific image registry. Similarly, we frequently find charts with immutable pod security configurations, which contravene our security stance defined in our default PodSecurityPolicies. These real-world examples exemplify the brittle nature of chart templating as it stands today.
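
For the registry example, what we'd like charts to offer is something along these lines. This is a sketch of one possible convention, not a description of any particular upstream chart; the `image.registry`, `image.repository`, and `image.tag` value names are assumptions.

```yaml
# values.yaml (hypothetical defaults)
image:
  registry: docker.io          # can be overridden per region
  repository: grafana/grafana
  tag: "7.0.0"                 # illustrative tag

# templates/deployment.yaml (fragment of a separate file)
# containers:
#   - name: {{ .Chart.Name }}
#     image: "{{ .Values.image.registry }}/{{ .Values.image.repository }}:{{ .Values.image.tag }}"
```

With the registry split out like this, a consumer only has to override `image.registry` to pull the same image from a different location.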

Instead of forking a chart, another approach might be to use Helm's post-rendering feature, which was made available in v3.1. The notion here is that Helm outputs a set of rendered YAML resource definitions on STDOUT, which are read (on STDIN) by a third-party transformation utility. In turn, the utility writes its transformed YAML to STDOUT. This allows the upstream templated charts to remain intact, whilst handing off the desired customization to something more suitable for the task (e.g. Kustomize). The whole process is managed by the Helm CLI using its `--post-renderer` flag. Provided it's managed carefully, this can be a pragmatic solution, but it's not an optimal experience.
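
As a sketch of how this might be wired up for the registry problem described earlier, assume a small wrapper executable (hypothetical, passed to `--post-renderer`) that saves Helm's output from STDIN to a file called `all.yaml` and then runs `kustomize build` in the same directory, so that the result goes to STDOUT. The `kustomization.yaml` alongside it could then rewrite the image name without touching the chart; the image and registry names here are illustrative.

```yaml
# kustomization.yaml: a sketch; all.yaml is assumed to hold Helm's rendered output
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - all.yaml
images:
  - name: grafana/grafana                            # image name as rendered by the chart
    newName: registry.example.com/grafana/grafana    # assumed regional registry
```

The chart itself stays untouched, but the customization now lives in a second tool with its own files, which is the part that needs careful management.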

Is the Future Brighter for Helm?

It's safe to say that the problems we've briefly discussed have already been widely recognized within the Helm community. The recent transition from Helm v2 to v3 (which Giant Swarm is currently building migration automation for) fixed some very big, unrelated problems in Helm. For example, Helm v3 removes the dependency on Helm's server-side component, Tiller, which had a permissive default configuration. Its removal also reduces complexity, and the overhead of having to manage Helm-specific in-cluster components. The big step change also paved the way for addressing the templating issues.

Whilst the existing templating mechanism will remain in situ for backwards compatibility (a huge investment has already been made by chart developers), in the future, Helm will introduce Lua scripting into the mix. Those who wish to persevere with the existing templating mechanism will continue to be supported, whilst those looking for a more sophisticated experience can make use of Lua scripts instead. The downside is that this more advanced templating capability has yet to see the light of day, whilst a number of other perceived deficiencies live on in the Helm experience.

Conclusion

Let's not knock Helm too much here. After all, it is the de facto mechanism for installing applications in Kubernetes, is widely used in the community, and has a lot else to offer (e.g. package provenance and integrity). In fact, its status and adoption hold it in good stead for the future, provided it delivers a more flexible and pragmatic approach to application configuration management.

In the meantime, its deficiencies mean that it competes with a growing army of alternative solutions to application configuration management, some of which we'll discuss in upcoming articles.

Next in the series, we'll lift the lid on Kustomize.
